Scientists Working on Continual Learning to Overcome ‘Catastrophic Forgetting’

By John P. Desmond, AI Trends Editor

Algorithms are trained on a dataset and may not be capable of learning new information without retraining, as opposed to the human brain, which learns constantly and builds on knowledge over time.

An artificial neural network that forgets previously learned information upon learning new information is demonstrating what is called “catastrophic forgetting.”
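The effect is easy to reproduce in a deliberately extreme toy. The Python sketch below is an illustrative assumption, not code from ContinualAI or any paper mentioned here. A single logistic-regression model stands in for a neural network. It is trained with plain SGD on a hypothetical task A, then on a hypothetical task B that labels the same input space with the opposite rule. Because no task A data is revisited, the shared weights are overwritten and task A accuracy collapses. The task rules, learning rate, and epoch count are arbitrary choices.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_task(rule, n=200, seed=0):
    """Sample n points uniformly from [-1, 1]^2 and label them with `rule`."""
    rng = random.Random(seed)
    points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]
    return [(x, 1 if rule(x) else 0) for x in points]


def logit(w, b, x):
    return w[0] * x[0] + w[1] * x[1] + b


def train(w, b, data, epochs=20, lr=0.5, seed=0):
    """Plain SGD on the logistic loss, starting from (w, b); sees only `data`."""
    rng = random.Random(seed)
    w = list(w)
    for _ in range(epochs):
        batch = data[:]
        rng.shuffle(batch)
        for x, y in batch:
            err = sigmoid(logit(w, b, x)) - y  # gradient of the loss w.r.t. the logit
            w[0] -= lr * err * x[0]
            w[1] -= lr * err * x[1]
            b -= lr * err
    return w, b


def accuracy(w, b, data):
    """Fraction of points where the predicted class (logit >= 0) matches the label."""
    return sum((logit(w, b, x) >= 0) == (y == 1) for x, y in data) / len(data)


# Hypothetical toy tasks: A labels x0 > 0 as 1; B applies the opposite rule.
task_a = make_task(lambda x: x[0] > 0, seed=1)
task_b = make_task(lambda x: x[0] < 0, seed=2)

w, b = train([0.0, 0.0], 0.0, task_a)
acc_a_before = accuracy(w, b, task_a)
w, b = train(w, b, task_b)  # sequential training: no task A data is replayed
acc_a_after = accuracy(w, b, task_a)

print(f"Task A accuracy after training on A: {acc_a_before:.2f}")
print(f"Task A accuracy after then training on B: {acc_a_after:.2f}")
```

Real continual-learning tasks rarely conflict this directly; the opposite-rule setup just makes the overwriting of shared weights easy to see.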

In an effort to nudge AI to work more like the human brain in this regard, a team of AI and neuroscience researchers has banded together to form ContinualAI, a nonprofit organization and open community of AI continual learning (CL) enthusiasts, described in a recent account in VentureBeat.

ContinualAI recently announced Avalanche, a library of tools compiled over the course of a year from over 40 contributors with the goal of making CL research easier and more reproducible. The organization was launched three years ago and has attracted support for its mission to advance CL, which its members see as fundamental for the future of AI.

“ContinualAI was founded with the idea of pushing the boundaries of science through distributed, open collaboration,” stated Vincenzo Lomonaco, cofounding president of the organization and assistant professor at the University of Pisa in Italy. “We provide a comprehensive platform to produce, discuss, and share original research in AI. And we do this completely for free, for anyone.”

OpenAI research scientist Jeff Clune, who helped to cofound Uber AI Labs in 2017, has called catastrophic forgetting the “Achilles’ heel” of machine learning. In an effort to address it, he published a paper last year detailing ANML (A Neuromodulated Meta-Learning Algorithm), an algorithm that managed to learn 600 sequential tasks with minimal catastrophic forgetting by “meta-learning” solutions to problems instead of manually engineering solutions. Separately, Alphabet’s DeepMind published research in 2017 suggesting that catastrophic forgetting isn’t an insurmountable challenge for neural networks.

Still, the answer is elusive. “The potential of continual learning exceeds catastrophic forgetting and begins to touch on more interesting questions of implementing other cognitive learning properties in AI,” stated Keiland Cooper, a cofounding member of ContinualAI and a neuroscience research associate at the University of California, Irvine, in an interview with VentureBeat. As an example, he mentioned transfer learning, a machine learning method where a model developed for one task is reused as the starting point for a model on a second task. In deep learning, pretrained models are commonly used as the starting point for computer vision and NLP tasks, owing to the vast compute and time resources required to develop neural network models for these problems from scratch.

ContinualAI has grown to over 1,000 members since its founding. “While there has been a renewed interest in continual learning in AI research, the neuroscience of how humans and animals can accomplish these feats is still largely unknown,” Cooper stated. He sees the organization as well positioned to help AI researchers, cognitive scientists, and neuroscientists interact and collaborate.

Google DeepMind Sees a Non-Stationary World

Raia Hadsell is a research scientist at Google DeepMind in London, whose research focus includes continual and transfer learning. “Artificial intelligence research has seen enormous progress over the past few decades, but it predominantly relies on fixed datasets and stationary environments,” she stated as lead author of a paper on continual learning published last December in Trends in Cognitive Sciences. “Continual learning is an increasingly relevant area of study that asks how artificial systems might learn sequentially, as biological systems do, from a continuous stream of correlated data.”

One benchmark for success in AI is the ability to emulate human learning on tasks such as recognizing images, playing games, or driving a car. “We then develop machine learning models that can match or exceed these given enough training data,” the authors write. “This paradigm puts the emphasis on the end result, rather than the learning process, and overlooks a critical characteristic of human learning: that it is robust to changing tasks and sequential experience.”

The world is non-stationary, unlike frozen machine learning models, “so human learning has evolved to thrive in dynamic learning settings,” the authors state. “However, this robustness is in stark contrast to the most powerful modern machine learning methods, which perform well only when presented with data that are carefully shuffled, balanced, and homogenized. Not only do these models underperform when presented with changing or incremental data regimes, in some cases they fail completely or suffer from rapid performance degradation on earlier learned tasks, known as catastrophic forgetting.”

Facebook Releases Open Source Models to Further CL Research

Work is ongoing to give AI systems this kind of robustness. Recent research from Facebook describes a new open source benchmark for continual learning, called CTrL, along with a new model.

“We expect that CL models will require less supervision, sidestepping one of the most significant shortcomings of modern AI systems: their reliance on large human-labeled data sets,” states lead author Marc’Aurelio Ranzato, research scientist, in the post on the Facebook AI Research blog.

The benchmark aims to measure how well knowledge is transferred between tasks, how well a CL model retains previously learned skills (thus avoiding catastrophic forgetting), and how it scales to a large number of tasks. “Until now, there has been no effective standard benchmark for evaluating CL systems across these axes,” the authors state. The research was conducted in collaboration with Sorbonne University in Paris, France.

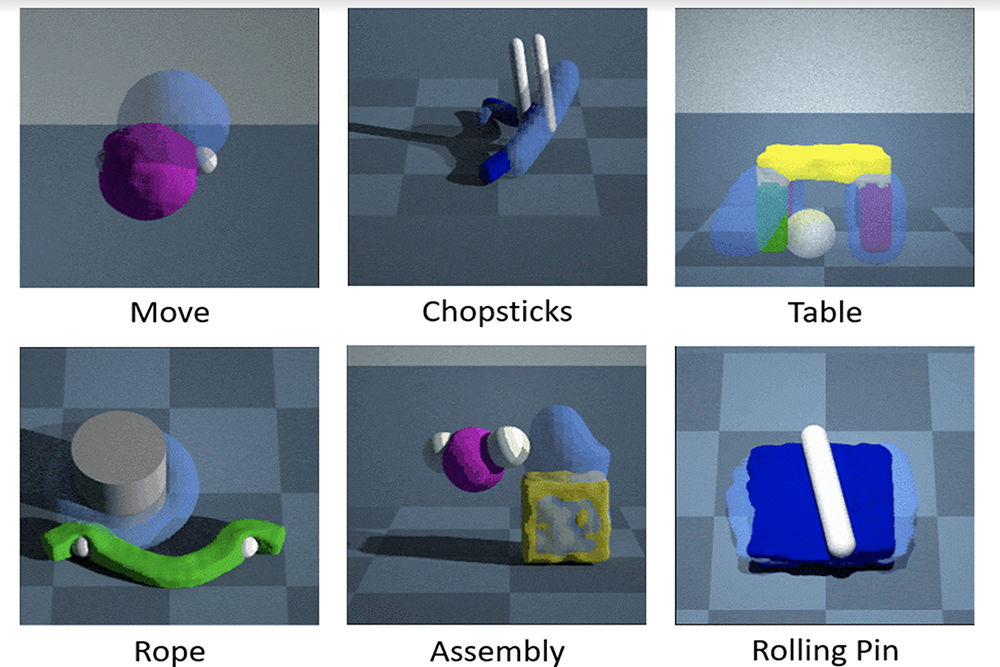

CTrL evaluates how much knowledge a model transfers from a sequence of previously observed tasks, and therefore how well it can transfer to similar new tasks. The benchmark proposes numerous streams of tasks to assess multiple dimensions of transfer, along with a long sequence of tasks to assess the ability of CL models to scale.
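Axes such as transfer and retention are usually summarized from an accuracy matrix `R`, where `R[i][j]` is the accuracy on task `j` measured after the model has trained on tasks `0..i` in order. The sketch below computes a few summary metrics commonly used in the continual-learning literature: final average accuracy, backward transfer, average forgetting, and a transfer score relative to hypothetical models trained from scratch on each task. These are generic definitions chosen for illustration, not necessarily the exact formulas CTrL uses, and every number in `R` and `scratch` is invented.

```python
from typing import List

Matrix = List[List[float]]


def average_accuracy(R: Matrix) -> float:
    """Mean accuracy over all tasks after training on the final task."""
    final = R[-1]
    return sum(final) / len(final)


def backward_transfer(R: Matrix) -> float:
    """Mean change on earlier tasks between just-learned and end of stream.

    Negative values indicate forgetting.
    """
    T = len(R)
    return sum(R[T - 1][j] - R[j][j] for j in range(T - 1)) / (T - 1)


def average_forgetting(R: Matrix) -> float:
    """Mean drop from each earlier task's best accuracy to its final accuracy."""
    T = len(R)
    drops = [max(R[i][j] for i in range(j, T - 1)) - R[T - 1][j] for j in range(T - 1)]
    return sum(drops) / len(drops)


def transfer_vs_scratch(R: Matrix, scratch: List[float]) -> float:
    """Mean gain from learning each task after the earlier ones vs. from scratch."""
    T = len(R)
    return sum(R[j][j] - scratch[j] for j in range(T)) / T


# Invented numbers for a hypothetical 3-task stream.
R = [
    [0.90, 0.10, 0.12],
    [0.60, 0.88, 0.15],
    [0.45, 0.70, 0.91],
]
scratch = [0.90, 0.80, 0.85]  # hypothetical independent, from-scratch baselines

print(f"average accuracy:   {average_accuracy(R):.3f}")
print(f"backward transfer:  {backward_transfer(R):.3f}")
print(f"average forgetting: {average_forgetting(R):.3f}")
print(f"transfer vs scratch: {transfer_vs_scratch(R, scratch):.3f}")
```

Scaling, the third axis, would be assessed by how these numbers (and compute or memory cost) behave as the stream grows much longer.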

The researchers also propose a new model, called Modular Networks with Task-Driven Priors (MNTDP), which, when confronting a new task, determines which previously learned models can be applied.
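The article does not detail how MNTDP makes that decision. As a heavily simplified, hypothetical sketch loosely inspired by the idea of a task-driven prior, one could score each previously learned feature extractor by how well a leave-one-out nearest-neighbor classifier separates a small labeled sample of the new task in that model's feature space, and start from the best-scoring one. The function names and the two toy "past models" are invented for illustration; the actual MNTDP model works with modular networks and is considerably more involved.

```python
from typing import Callable, Dict, List, Tuple

Vector = Tuple[float, ...]
FeatureFn = Callable[[Vector], Vector]


def loo_1nn_accuracy(feats: List[Vector], labels: List[int]) -> float:
    """Leave-one-out 1-nearest-neighbor accuracy (squared Euclidean distance)."""
    correct = 0
    for i, fi in enumerate(feats):
        best_j, best_d = -1, float("inf")
        for j, fj in enumerate(feats):
            if i == j:
                continue
            d = sum((a - b) ** 2 for a, b in zip(fi, fj))
            if d < best_d:
                best_j, best_d = j, d
        correct += labels[best_j] == labels[i]
    return correct / len(feats)


def rank_past_models(models: Dict[str, FeatureFn], xs: List[Vector],
                     ys: List[int]) -> List[Tuple[str, float]]:
    """Hypothetical helper: rank past feature extractors by 1-NN fit to the new task."""
    scores = [(name, loo_1nn_accuracy([f(x) for x in xs], ys))
              for name, f in models.items()]
    return sorted(scores, key=lambda s: s[1], reverse=True)


# Toy "past models": each keeps only one input coordinate as its feature.
past_models: Dict[str, FeatureFn] = {
    "past_task_1": lambda x: (x[0],),
    "past_task_2": lambda x: (x[1],),
}

# Small labeled sample of a new task whose label depends on the sign of x0.
xs = [(-2.0, 0.1), (-1.5, 0.9), (-1.0, -0.8), (1.0, 0.2), (1.5, -0.7), (2.0, 0.5)]
ys = [0, 0, 0, 1, 1, 1]

ranking = rank_past_models(past_models, xs, ys)
print(ranking)
```

Here the extractor that keeps `x0` separates the new task perfectly, so it ranks first; the other scores 1/6 and would be a poor starting point.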

“We’ve long been committed to the principles of open science, and this research is still in its early stages, so we’re excited to work with the community on advancing CL,” the authors state.

Read the source articles and information in VentureBeat, in Trends in Cognitive Sciences and on the Facebook AI Research blog.