HAZARD - Embodied decision making in dynamically changing environments


Recent advances in high-fidelity virtual environments serve as one of the major driving forces for building intelligent embodied agents that perceive, reason about, and interact with the physical world. Typically, these environments remain unchanged unless agents interact with them. In real-world scenarios, however, agents may also face dynamically changing environments characterized by unexpected events, and they need to act rapidly in response. To address this gap, we propose a new simulated embodied benchmark, called HAZARD, specifically designed to assess the decision-making abilities of embodied agents in dynamic situations. HAZARD consists of three unexpected disaster scenarios (fire, flood, and wind) and specifically supports the use of large language models (LLMs) to assist common-sense reasoning and decision-making.

This benchmark enables us to evaluate autonomous agents' decision-making capabilities in dynamically changing environments across various pipelines, including reinforcement learning (RL), rule-based, and search-based methods. As a first step toward addressing this challenge with large language models, we further develop an LLM-based agent and perform an in-depth analysis of its promise and its limitations in solving these tasks.

You can download the full paper below.

1 INTRODUCTION OF HAZARD

Embodied agents operate in a dynamic world that changes constantly: the sun rises and sets, rivers flow, the weather varies, and humans go about their activities. To navigate and function in such an ever-changing environment, robots must perceive changes in their surroundings, reason about the mechanisms underlying these changes, and then make decisions in response to them.

To simulate a dynamic world, it is necessary to create environments that can change spontaneously. Various simulation platforms have emerged in the field of embodied AI, including iGibson (Shen et al., 2021), Habitat (Savva et al., 2019), SAPIEN (Xiang et al., 2020b), VirtualHome (Puig et al., 2018), AI2THOR (Kolve et al., 2017), and ThreeDWorld (TDW) (Gan et al.). Existing tasks on these platforms involve agent exploration and agent-driven interactions, but they lack support for environment-driven changes, which can be both influential and unpredictable. The iGibson 2.0 (Li et al.) platform supports spontaneous environmental changes only to a limited extent: the changes are restricted to the propagation of a few variables between individual objects.

In this paper, we propose the HAZARD challenge, an exploration of embodied decision-making in dynamic environments, for which we design and implement new capabilities for physical simulation and visual effects on top of ThreeDWorld. HAZARD takes the form of unexpected disasters, such as fires, floods, and wild winds, and requires agents to rescue valuable items from these continuously evolving and perilous circumstances.

The HAZARD challenge places agents within indoor or outdoor environments, compelling them to decipher disaster dynamics and construct an optimal rescue strategy. Figure 1 illustrates the three scenarios.

Qinhong Zhou and Sunli Chen contributed equally.

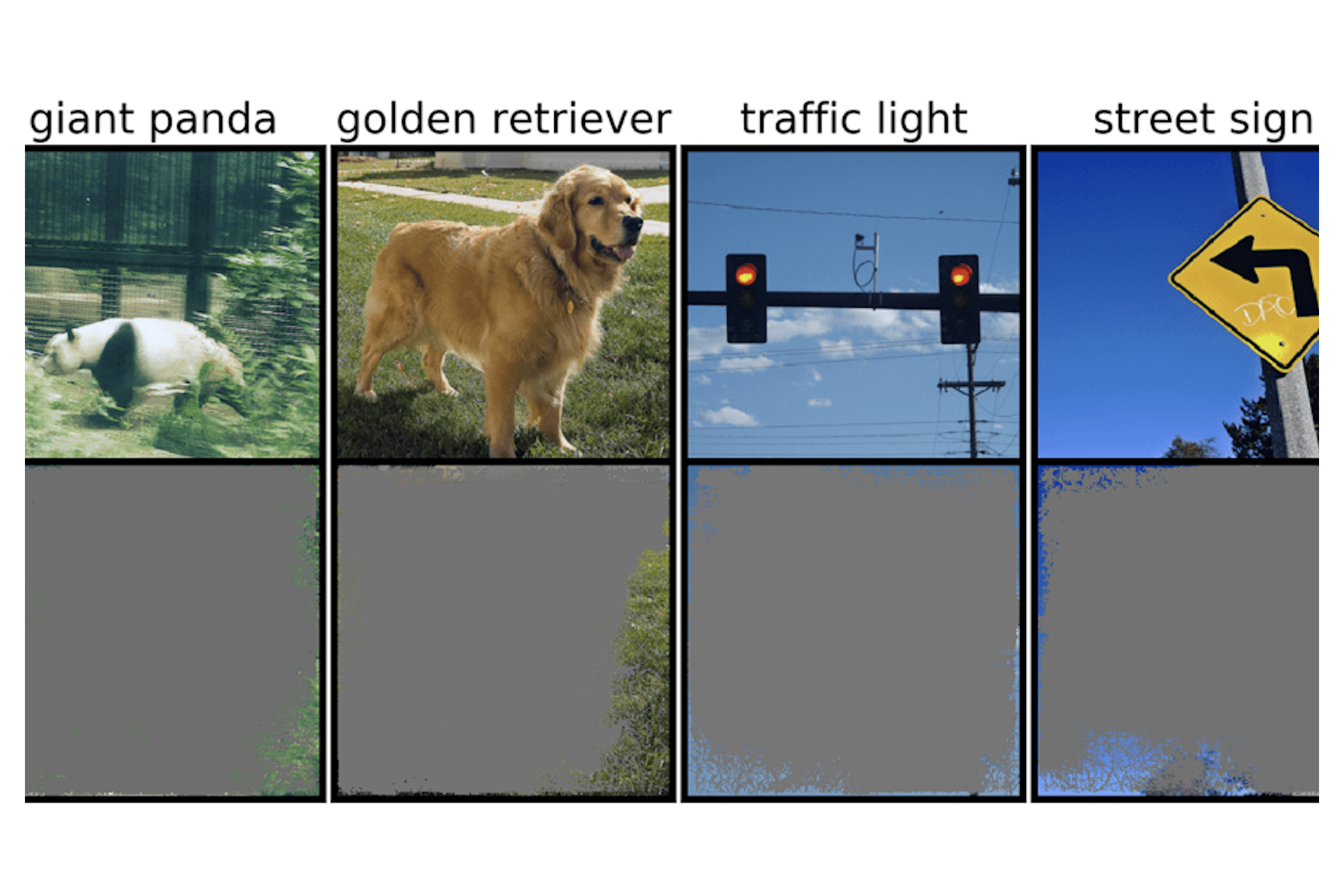

FIGURE 1 - ILLUSTRATION OF HAZARD

The HAZARD challenge consists of three dynamically changing scenarios: fire, flood, and wind. In the fire scenario, flames continuously spread and burn objects. In the flood scenario, water spreads and rises, washing away objects and causing damage to non-waterproof objects. The wind scenario poses the challenge of objects being blown away, making them hard to reach. These scenarios present embodied agents with complex perception, reasoning, and planning challenges.

Scenarios vary in severity and complexity. An indoor fire scenario might involve the rapid spread of flames, threatening flammable target objects. In an indoor flood scenario, an overwhelming volume of water inundates the house, jeopardizing non-waterproof targets. In an outdoor wind scenario, strong winds scatter lightweight objects across roads, making retrieval challenging for agents. To rescue target objects from these disasters, agents must transfer them to safe zones such as backpacks or shopping carts.

To facilitate this endeavor, we introduce a comprehensive benchmark comprising these disaster scenarios, complete with quantitative evaluation metrics. We also provide an API for using large language models (LLMs) for action selection. This API converts visual observations and historical memories into textual descriptions, thereby providing a semantic understanding of the dynamic environment. To make efficient use of LLMs, we compress long sequences of low-level actions using the A* algorithm, which significantly reduces the frequency of LLM queries.
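The action compression described above can be illustrated with a minimal sketch: a single high-level decision ("walk to the target") is expanded by A* into many low-level moves, so a planner such as an LLM would only need to be queried once per high-level decision. This is not the benchmark's implementation. The occupancy grid, the 4-connected unit moves, the move names, and the helper `expand_walk_to` are all assumptions made for illustration.

```python
import heapq

# Hypothetical low-level move names; the benchmark's real action set may differ.
MOVE_NAMES = {(1, 0): "move_down", (-1, 0): "move_up",
              (0, 1): "move_right", (0, -1): "move_left"}


def astar(grid, start, goal):
    """Shortest path on a 4-connected grid (0 = free, 1 = blocked), or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]  # entries are (f, g, cell)
    came_from = {start: None}
    best_cost = {start: 0}
    while frontier:
        _, g, current = heapq.heappop(frontier)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if g > best_cost[current]:
            continue  # stale queue entry, a cheaper route was already found
        for dr, dc in MOVE_NAMES:
            nxt = (current[0] + dr, current[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            if grid[nxt[0]][nxt[1]] == 1:
                continue
            new_cost = g + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(frontier, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


def expand_walk_to(grid, agent, target):
    """Expand one high-level 'walk to' decision into low-level unit moves."""
    path = astar(grid, agent, target)
    if path is None:
        return []  # target unreachable: no low-level moves to execute
    return [MOVE_NAMES[(b[0] - a[0], b[1] - a[1])] for a, b in zip(path, path[1:])]


# Toy map: a wall with a single gap separates the agent from the target.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
steps = expand_walk_to(GRID, (0, 0), (2, 0))
print(len(steps), "low-level moves for one high-level decision:", steps)
```

In this toy map, one high-level decision stands in for six low-level moves. In a dynamic scene the path may need to be recomputed as obstacles such as fire or water change the map.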

We evaluate both LLM-based agents and several other decision-making pipelines on our benchmark, including a rule-based pipeline that follows a simple set of rules, a search-based pipeline that uses the Monte Carlo tree search (MCTS) algorithm for action selection, and a reinforcement learning-based pipeline. Our experiments show that while the LLM pipeline is capable of understanding and considering certain basic factors, such as object distance, it may struggle to comprehend and handle more complex factors, such as the dynamic nature of environmental changes.

The main contributions of our work are: 1) designing and implementing a new feature that enables the simulation of complex fire, flood, and wind effects for both indoor and outdoor virtual environments in TDW; 2) developing a comprehensive benchmark, HAZARD, for evaluating embodied decision-making in dynamically changing environments, as well as incorporating the LLM API into our benchmark; and 3) conducting an in-depth analysis of the perception and reasoning challenges that the proposed benchmark poses for existing methods, especially LLM-based agents.

Download the full paper below: