NVIDIA Advances Robot Learning and Humanoid Development With New AI and Simulation Tools

Robotics developers can greatly accelerate their work on AI-enabled robots, including humanoids, using new AI and simulation tools and workflows that NVIDIA revealed this week at the Conference for Robot Learning (CoRL) in Munich, Germany.

The lineup includes the general availability of the NVIDIA Isaac Lab robot learning framework; six new humanoid robot learning workflows for Project GR00T, an initiative to accelerate humanoid robot development; and new world-model development tools for video data curation and processing, including the NVIDIA Cosmos tokenizer and NVIDIA NeMo Curator for video processing.

The open-source Cosmos tokenizer gives robotics developers superior visual tokenization by breaking down images and videos into high-quality tokens with exceptionally high compression rates. It runs up to 12x faster than current tokenizers, while NeMo Curator curates video data up to 7x faster than unoptimized pipelines.

Also timed with CoRL, NVIDIA presented 23 papers and nine workshops related to robot learning and released training and workflow guides for developers. Further, Hugging Face and NVIDIA announced they’re collaborating to accelerate open-source robotics research with LeRobot, NVIDIA Isaac Lab and NVIDIA Jetson for the developer community.

Accelerating Robot Development With Isaac Lab

NVIDIA Isaac Lab is an open-source robot learning framework built on NVIDIA Omniverse, a platform for developing OpenUSD applications for industrial digitalization and physical AI simulation.

Developers can use Isaac Lab to train robot policies at scale. This open-source unified robot learning framework applies to any embodiment — from humanoids to quadrupeds to collaborative robots — to handle increasingly complex movements and interactions.

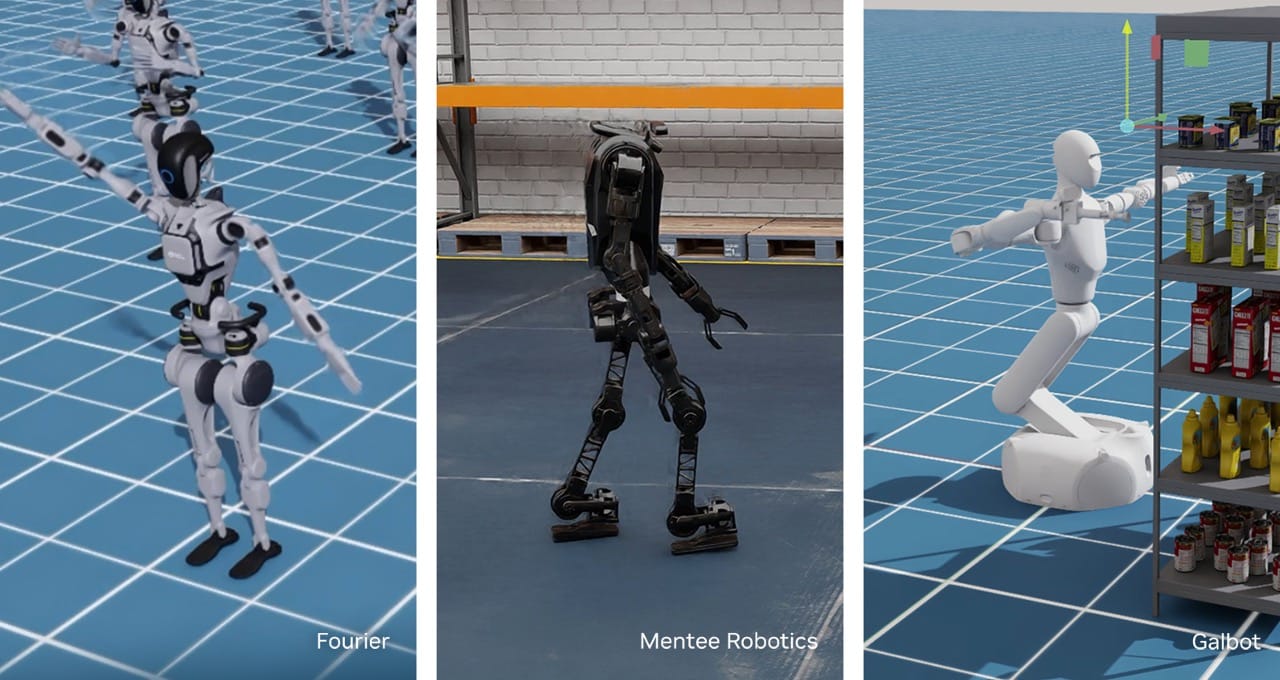

Leading commercial robot makers, robotics application developers and robotics research entities around the world are adopting Isaac Lab, including 1X, Agility Robotics, The AI Institute, Berkeley Humanoid, Boston Dynamics, Field AI, Fourier, Galbot, Mentee Robotics, Skild AI, Swiss-Mile, Unitree Robotics and XPENG Robotics.

Project GR00T: Foundations for General-Purpose Humanoid Robots

Building advanced humanoids is extremely difficult, demanding multilayer technological and interdisciplinary approaches to make the robots perceive, move and learn skills effectively for human-robot and robot-environment interactions.

Project GR00T is an initiative to develop accelerated libraries, foundation models and data pipelines that speed up work across the global humanoid robot developer ecosystem.

Six new Project GR00T workflows provide humanoid developers with blueprints to realize the most challenging humanoid robot capabilities. They include:

- GR00T-Gen for building generative AI-powered, OpenUSD-based 3D environments

- GR00T-Mimic for robot motion and trajectory generation

- GR00T-Dexterity for robot dexterous manipulation

- GR00T-Control for whole-body control

- GR00T-Mobility for robot locomotion and navigation

- GR00T-Perception for multimodal sensing

“Humanoid robots are the next wave of embodied AI,” said Jim Fan, senior research manager of embodied AI at NVIDIA. “NVIDIA research and engineering teams are collaborating across the company and our developer ecosystem to build Project GR00T to help advance the progress and development of global humanoid robot developers.”

New Development Tools for World Model Builders

Today, robot developers are building world models — AI representations of the world that can predict how objects and environments respond to a robot’s actions. Building these world models is incredibly compute- and data-intensive, with models requiring thousands of hours of real-world, curated image or video data.

NVIDIA Cosmos tokenizers provide efficient, high-quality encoding and decoding to simplify the development of these world models. They set a new standard for keeping distortion and temporal instability to a minimum, enabling high-quality video and image reconstructions.

Providing high-quality compression and up to 12x faster visual reconstruction, the Cosmos tokenizer paves the path for scalable, robust and efficient development of generative applications across a broad spectrum of visual domains.
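To make the idea of spatial and temporal compression concrete, the following minimal Python sketch computes the size of a token grid for a video clip. The function names, the clip dimensions and the compression factors (8x temporal, 16x spatial) are illustrative assumptions chosen for the arithmetic; they are not taken from the Cosmos tokenizer's published configuration or API.

```python
from math import ceil

def token_grid(frames, height, width, temporal_factor, spatial_factor):
    """Return the (time, height, width) shape of the token grid.

    Each token covers `temporal_factor` frames and a
    `spatial_factor` x `spatial_factor` patch of pixels.
    All factors here are hypothetical, for illustration only.
    """
    return (
        ceil(frames / temporal_factor),
        ceil(height / spatial_factor),
        ceil(width / spatial_factor),
    )

def compression_ratio(frames, height, width, temporal_factor, spatial_factor):
    """Ratio of pixel positions in the clip to tokens in the grid."""
    t, h, w = token_grid(frames, height, width, temporal_factor, spatial_factor)
    return (frames * height * width) / (t * h * w)

# Hypothetical clip: 32 frames at 256x256 pixels.
grid = token_grid(32, 256, 256, temporal_factor=8, spatial_factor=16)
ratio = compression_ratio(32, 256, 256, temporal_factor=8, spatial_factor=16)
print(grid)   # (4, 16, 16)
print(ratio)  # 2048.0
```

Under these assumed factors, about two million pixel positions reduce to 1,024 tokens, which illustrates why higher compression lowers the compute needed to train on long videos.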

1X, a humanoid robot company, has updated the 1X World Model Challenge dataset to use the Cosmos tokenizer.

“NVIDIA Cosmos tokenizer achieves really high temporal and spatial compression of our data while still retaining visual fidelity,” said Eric Jang, vice president of AI at 1X Technologies. “This allows us to train world models with long horizon video generation in an even more compute-efficient manner.”

Other humanoid and general-purpose robot developers, including XPENG Robotics and Hillbot, are developing with the NVIDIA Cosmos tokenizer to manage high-resolution images and videos.

NeMo Curator now includes a video processing pipeline. This enables robot developers to improve their world-model accuracy by processing large-scale text, image and video data.

Curating video data poses challenges due to its massive size, requiring scalable pipelines and efficient orchestration for load balancing across GPUs. Additionally, models for filtering, captioning and embedding need optimization to maximize throughput.
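The load-balancing problem described above can be illustrated with a simple greedy scheduler. This is a generic sketch, not NeMo Curator's orchestration logic: the `balance` function, the clip names and the durations are all hypothetical, and clip length in seconds is assumed to be a reasonable proxy for processing cost.

```python
import heapq

def balance(clip_seconds, num_gpus):
    """Assign clips to GPUs, longest clip first, always picking the
    GPU with the smallest accumulated load (a greedy heuristic)."""
    # Min-heap of (accumulated_seconds, gpu_index).
    heap = [(0.0, gpu) for gpu in range(num_gpus)]
    heapq.heapify(heap)
    assignment = {gpu: [] for gpu in range(num_gpus)}

    longest_first = sorted(clip_seconds.items(), key=lambda kv: kv[1], reverse=True)
    for clip_id, seconds in longest_first:
        load, gpu = heapq.heappop(heap)        # least-loaded GPU so far
        assignment[gpu].append(clip_id)
        heapq.heappush(heap, (load + seconds, gpu))
    return assignment

# Hypothetical clip durations in seconds.
clips = {"a": 90, "b": 60, "c": 45, "d": 30, "e": 15}
plan = balance(clips, num_gpus=2)
print(plan)  # {0: ['a', 'd'], 1: ['b', 'c', 'e']}
```

In this example both GPUs end up with 120 seconds of video. A production pipeline would also need to account for model-specific throughput and data movement, which this sketch ignores.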

NeMo Curator overcomes these challenges by streamlining data curation with automatic pipeline orchestration, reducing processing time significantly. It supports linear scaling across multi-node, multi-GPU systems, efficiently handling over 100 petabytes of data. This simplifies AI development, reduces costs and accelerates time to market.

Advancing the Robot Learning Community at CoRL

The nearly two dozen research papers the NVIDIA robotics team released with CoRL cover breakthroughs in integrating vision language models for improved environmental understanding and task execution, temporal robot navigation, long-horizon planning strategies for complex multistep tasks and the use of human demonstrations for skill acquisition.

Groundbreaking papers for humanoid robot control and synthetic data generation include SkillGen, a system based on synthetic data generation for training robots with minimal human demonstrations, and HOVER, a robot foundation model for controlling humanoid robot locomotion and manipulation.

NVIDIA researchers will also be participating in nine workshops at the conference. Learn more about the full schedule of events.

Availability

NVIDIA Isaac Lab 1.2 is available now and is open source on GitHub. NVIDIA Cosmos tokenizer is available now on GitHub and Hugging Face. NeMo Curator for video processing will be available at the end of the month.

The new NVIDIA Project GR00T workflows are coming soon to help robot companies build humanoid robot capabilities with greater ease. Read more about the workflows on the NVIDIA Technical Blog.

Researchers and developers learning to use Isaac Lab can now access developer guides and tutorials, including an Isaac Gym to Isaac Lab migration guide.

Discover the latest in robot learning and simulation in an upcoming OpenUSD insider livestream on Nov. 13, and attend the NVIDIA Isaac Lab office hours for hands-on support and insights.

Developers can apply to join the NVIDIA Humanoid Robot Developer Program.