Undergraduates explore practical applications of artificial intelligence

SuperUROP scholars apply deep learning to improve accuracy of climate models, profitably match computers in the cloud with customers, and more.

Deep neural networks excel at finding patterns in datasets too vast for the human brain to pick apart. That ability has made deep learning indispensable to just about anyone who deals with data. This year, the MIT Quest for Intelligence and the MIT-IBM Watson AI Lab sponsored 17 undergraduates to work with faculty on yearlong research projects through MIT’s Advanced Undergraduate Research Opportunities Program (SuperUROP).

Students got to explore AI applications in climate science, finance, cybersecurity, and natural language processing, among other fields. And faculty got to work with students from outside their departments, an experience they describe in glowing terms. “Adeline is a shining testament of the value of the UROP program,” says Raffaele Ferrari, a professor in MIT’s Department of Earth, Atmospheric and Planetary Sciences, of his advisee. “Without UROP, an oceanography professor might have never had the opportunity to collaborate with a student in computer science.”

Highlighted below are four SuperUROP projects from this past year.

A faster algorithm to manage cloud-computing jobs

The shift from desktop computing to far-flung data centers in the “cloud” has created bottlenecks for companies selling computing services. Faced with a constant flux of orders and cancellations, their profits depend heavily on efficiently pairing machines with customers.

Approximation algorithms are used to carry out this feat of optimization. Among all the possible ways of assigning machines to customers by price and other criteria, they find a schedule that achieves near-optimal profit. For the last year, junior Spencer Compton worked on a virtual whiteboard with MIT Professor Ronitt Rubinfeld and postdoc Slobodan Mitrović to find a faster scheduling method.

“We didn’t write any code,” he says. “We wrote proofs and used mathematical ideas to find a more efficient way to solve this optimization problem. The same ideas that improve cloud-computing scheduling can be used to assign flight crews to planes, among other tasks.”

In a pre-print paper on arXiv, Compton and his co-authors show how to speed up an approximation algorithm under dynamic conditions. They also show how to locate machines assigned to individual customers without computing the entire schedule.
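To make the scheduling problem concrete, here is a toy version in Python: one machine, a fixed list of customer jobs, each with a start time, an end time, and a price. The sketch uses the textbook exact dynamic program for weighted interval scheduling. It is not the approximation algorithm from the paper, which handles orders arriving and being canceled over time. The function name and the job format are assumptions made for illustration.

```python
from bisect import bisect_right


def max_profit_schedule(jobs):
    """Pick non-overlapping jobs on one machine to maximize total price.

    jobs: list of (start, end, price) tuples (hypothetical format).
    A job that starts exactly when another ends does not overlap it.
    Returns (total_profit, chosen_jobs).
    """
    jobs = sorted(jobs, key=lambda job: job[1])  # sort by end time
    ends = [job[1] for job in jobs]
    n = len(jobs)

    best = [0] * (n + 1)   # best[i]: max profit using only the first i jobs
    prev = [0] * n         # prev[i]: number of earlier jobs ending by job i's start
    take = [False] * n     # take[i]: whether job i is used in best[i + 1]

    for i, (start, _end, price) in enumerate(jobs):
        prev[i] = bisect_right(ends, start, 0, i)
        with_job = best[prev[i]] + price
        if with_job > best[i]:
            best[i + 1] = with_job
            take[i] = True
        else:
            best[i + 1] = best[i]

    # Walk backward through the table to recover the chosen jobs.
    chosen = []
    i = n
    while i > 0:
        if take[i - 1]:
            chosen.append(jobs[i - 1])
            i = prev[i - 1]
        else:
            i -= 1
    chosen.reverse()
    return best[n], chosen


if __name__ == "__main__":
    orders = [(0, 3, 5), (2, 5, 6), (4, 7, 5), (6, 9, 4)]
    print(max_profit_schedule(orders))
```

Recomputing a table like this from scratch after every new order or cancellation is the kind of cost that a faster dynamic method aims to avoid.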

A big challenge was finding the crux of the project, he says. “There’s a lot of literature out there, and a lot of people who have thought about related problems. It was fun to look at everything that’s been done and brainstorm to see where we could make an impact.”

How much heat and carbon can the oceans absorb?

Earth’s oceans regulate climate by drawing down excess heat and carbon dioxide from the air. But as the oceans warm, it’s unclear if they will soak up as much carbon as they do now. A slowed uptake could bring about more warming than what today’s climate models predict. It’s one of the big questions facing climate modelers as they try to refine their predictions for the future.

The biggest obstacle in their way is the complexity of the problem: the computing power available to today’s global climate models is not enough to resolve the fine-scale dynamics influencing key variables like sea-surface temperatures. To compensate for the lost accuracy, researchers are building surrogate models to approximate the missing dynamics without explicitly solving for them.

In a project with MIT Professor Raffaele Ferrari and research scientist Andre Souza, MIT junior Adeline Hillier is exploring how deep learning solutions can be used to improve or replace physical models of the uppermost layer of the ocean, which drives the rate of heat and carbon uptake. “If the model has a small footprint and succeeds under many of the physical conditions encountered in the real world, it could be incorporated into a global climate model and hopefully improve climate projections,” she says.

In the course of the project, Hillier learned how to code in the programming language Julia. She also got a crash course in fluid dynamics. “You’re trying to model the effects of turbulent dynamics in the ocean,” she says. “It helps to know what the processes and physics behind them look like.”

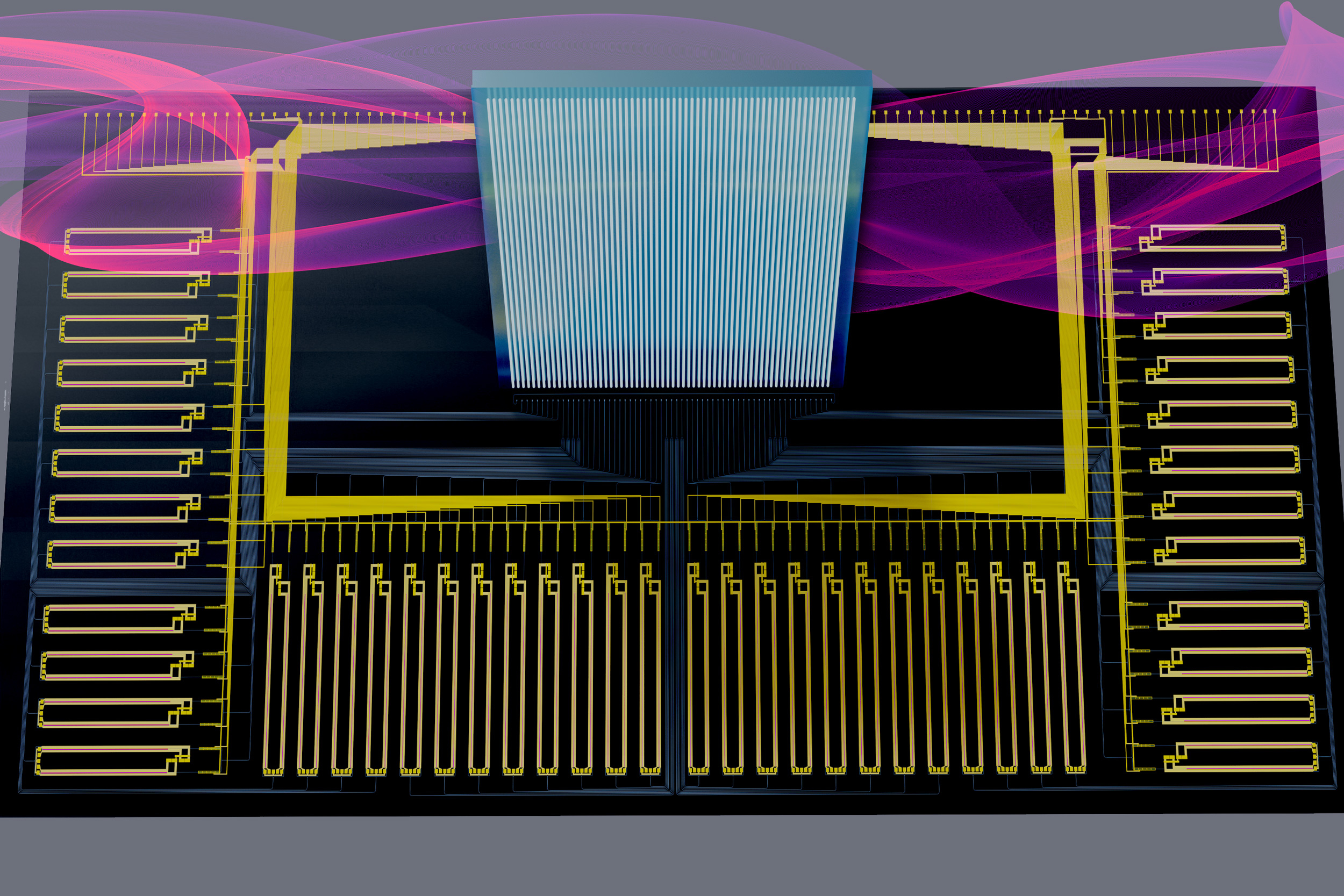

In search of more efficient deep learning models

There are thousands of ways to design a deep learning model to solve a given task. Automating the design process promises to narrow the options and make these tools more accessible. But finding the optimal architecture is anything but simple. Most automated searches pick the model that maximizes validation accuracy without considering the structure of the underlying data, which may suggest a simpler, more robust solution. As a result, more reliable or data-efficient architectures are passed over.
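The selection rule described above, picking whichever candidate scores highest on held-out data, can be sketched in a few lines of Python. The example below stands in a tiny one-dimensional nearest-neighbor classifier for a deep network and searches over a single setting, the number of neighbors k. The data format, function names, and choice of classifier are all assumptions made for illustration, not the methods used in the project.

```python
def knn_predict(train, x, k):
    """Predict a 0/1 label for x by majority vote of the k nearest training points."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > k else 0


def validation_accuracy(train, val, k):
    """Fraction of validation points that the k-neighbor classifier labels correctly."""
    correct = sum(knn_predict(train, x, k) == y for x, y in val)
    return correct / len(val)


def select_by_validation(train, val, candidates):
    """Return the candidate k with the highest validation accuracy, plus all scores.

    Ties go to the earliest candidate in the list; nothing about the
    structure of the data enters the decision.
    """
    scores = {k: validation_accuracy(train, val, k) for k in candidates}
    best_k = max(candidates, key=lambda k: scores[k])
    return best_k, scores


if __name__ == "__main__":
    # Hypothetical toy data: (feature, label) pairs with one noisy label at x = 2.
    train = [(0, 0), (1, 0), (2, 1), (3, 0), (4, 1), (5, 1), (6, 1)]
    val = [(1.6, 0), (2.4, 0), (4.5, 1), (5.5, 1)]
    print(select_by_validation(train, val, [1, 3, 5]))
```

When two candidates tie on validation accuracy, this rule has no basis for preferring the simpler or more robust one, which is the gap the passage describes.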

“Instead of looking at the accuracy of the model alone, we should focus on the structure of the data,” says MIT senior Kristian Georgiev. In a project with MIT Professor Asu Ozdaglar and graduate student Alireza Fallah, Georgiev is looking at ways to automatically query the data to find the model that best suits its constraints. “If you choose your architecture based on the data, you’re more likely to get a good and robust solution from a learning theory perspective,” he says.

The hardest part of the project was the exploratory phase at the start, he says. To find a good research question he read through papers on topics ranging from AutoML to representation theory. But it was worth it, he says, to be able to work at the intersection of optimization and generalization. “To make good progress in machine learning you need to combine both of these fields.”

What makes humans so good at recognizing faces?

Face recognition comes easily to humans. Picking out familiar faces in a blurred or distorted photo is a cinch. But we don’t really understand why or how to replicate this superpower in machines. To home in on the principles important to recognizing faces, researchers have shown human subjects progressively degraded headshots to see where recognition starts to break down. They are now performing similar experiments on computers to see if deeper insights can be gained.

In a project with MIT Professor Pawan Sinha and the MIT Quest for Intelligence, junior Ashika Verma applied a set of filters to a dataset of celebrity photos. She blurred their faces, distorted them, and changed their color to see if a face-recognition model could pick out photos of the same face. She found that the model did best when the photos were either natural color or grayscale, consistent with the human studies. Accuracy slipped when a color filter was added, but not as much as it did for the human subjects — a wrinkle that Verma plans to investigate further.
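The kinds of degradations described above can be illustrated with a minimal Python sketch. It represents an image as a nested list of RGB tuples and applies three simple filters: grayscale conversion, a box blur, and a color tint. The article does not name the specific filters or tools used in the project, so the filter choices, function names, and image format here are assumptions.

```python
def to_grayscale(img):
    """Replace each RGB pixel with its luminance, using the ITU-R BT.601 weights."""
    out = []
    for row in img:
        new_row = []
        for r, g, b in row:
            y = round(0.299 * r + 0.587 * g + 0.114 * b)
            new_row.append((y, y, y))
        out.append(new_row)
    return out


def box_blur(img, radius=1):
    """Average each pixel with its neighbors in a (2 * radius + 1)-wide square.

    Pixels near the border average over the part of the square that lies
    inside the image. Channel means are rounded down to integers.
    """
    height, width = len(img), len(img[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            neighbors = [
                img[j][i]
                for j in range(max(0, y - radius), min(height, y + radius + 1))
                for i in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            count = len(neighbors)
            row.append(tuple(sum(p[c] for p in neighbors) // count for c in range(3)))
        out.append(row)
    return out


def tint(img, scale=(1.0, 0.5, 0.5)):
    """Scale the red, green, and blue channels separately, clipping at 255."""
    return [
        [tuple(min(255, round(v * s)) for v, s in zip(pixel, scale)) for pixel in row]
        for row in img
    ]


if __name__ == "__main__":
    # Hypothetical 2x2 test image: red, green, blue, and white pixels.
    image = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
    for degrade in (to_grayscale, box_blur, tint):
        print(degrade.__name__, degrade(image))
```

In an experiment of this kind, each degraded copy would be passed to the face-recognition model alongside the original to check whether the two are still matched to the same person.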

The work is part of a broader effort to understand what makes humans so good at recognizing faces, and how machine vision might be improved as a result. It also ties in with Project Prakash, a nonprofit in India that treats blind children and tracks their recovery to learn more about the visual system and brain plasticity. “Running human experiments takes more time and resources than running computational experiments,” says Verma’s advisor, Kyle Keane, a researcher with MIT Quest. “We’re trying to make AI as human-like as possible so we can run a lot of computational experiments to identify the most promising experiments to run on humans.”

Degrading the images to use in the experiments, and then running them through the deep nets, was a challenge, says Verma. “It’s very slow,” she says. “You work 20 minutes at a time and then you wait.” But working in a lab with an advisor made it worth it, she says. “It was fun to dip my toes into neuroscience.”

SuperUROP projects were funded, in part, by the MIT-IBM Watson AI Lab, MIT Quest Corporate, and Eric Schmidt, technical advisor to Alphabet Inc., and his wife, Wendy.