Nvidia Market Cap Exceeds US$1 Trillion, an Early Winner in the AI Boom


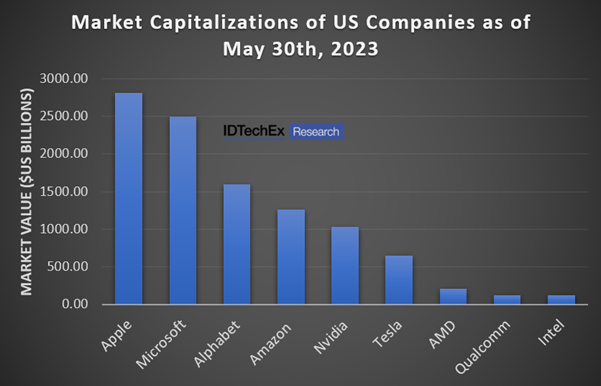

While the current AI boom is only just getting started, one early winner is Nvidia, whose market capitalization exceeded US$1 trillion for the first time on Tuesday, May 30th. For a chip designer with no fabrication capabilities of its own, this is a significant milestone. Although the company was valued at around US$970 billion as of June 1st, the brief rise in its share price saw Nvidia join an elite club that currently includes only five other companies: Apple, Microsoft, Alphabet, Amazon, and Saudi Aramco. Only three other companies (Tesla, Meta, and PetroChina) have previously crossed the US$1 trillion threshold.

Nvidia’s share price has risen roughly 170% since the beginning of the year, outpacing other members of the S&P 500 index. That growth is closely tied to the increasing awareness and use of AI tools, and to their potential impact on businesses and consumers alike.

ChatGPT has been discussed in boardrooms and around the water cooler since its release in November 2022. By January 2023, roughly two months after launch, it had reached 100 million users. The chatbot, which is built on a large language model with 175 billion parameters, was trained using approximately 10,000 Nvidia A100 graphics processing units (GPUs). Nvidia currently accounts for around 80% of the global GPU market, and demand for its GPUs has been bolstered by AI and cryptocurrency mining: the parallel processing capabilities of GPUs make them as useful for training AI algorithms as for mining cryptocurrency. Market research company IDTechEx recently published a report that forecasts Nvidia’s continued dominance not just in GPUs but, more specifically, as a leader in AI hardware, with the company taking a considerable share of the US$257 billion in AI chip revenue forecast for 2033.

Nvidia currently generates more revenue from its Compute & Networking reporting segment (which includes data center platforms as well as autonomous vehicle solutions and cryptocurrency mining processors) than from its Graphics reporting segment. In FY2023, Nvidia generated US$15.01 billion in Data Center revenue, or 55.6% of its total revenue for the year. This represents a 41% increase over FY2022, and Nvidia has grown its Data Center revenue by more than 40% year on year every year since 2020. Compared with other AI chip designers, such as AMD (which recently acquired Xilinx) and Qualcomm, Nvidia is clearly establishing early dominance in the data center AI space.
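The revenue figures above can be cross-checked with simple arithmetic. The short Python sketch below derives the FY2023 total revenue and the FY2022 Data Center revenue implied by the quoted numbers (US$15.01 billion, 55.6%, and 41%). The function names are hypothetical, and the outputs are rounded back-of-envelope estimates derived from the article's figures, not reported results.

```python
# Back-of-envelope checks using only the FY2023 figures quoted in the article.
# Outputs are implied (derived) values, not figures reported by Nvidia.

DATA_CENTER_FY2023_BN = 15.01  # US$ billions, as stated in the text
DATA_CENTER_SHARE = 0.556      # Data Center share of FY2023 total revenue (55.6%)
YOY_GROWTH = 0.41              # 41% increase over FY2022


def implied_total_revenue(segment_bn: float, share: float) -> float:
    """Total revenue implied by a segment's revenue and its share of the total."""
    return segment_bn / share


def implied_prior_year(current_bn: float, growth: float) -> float:
    """Prior-year revenue implied by current revenue and year-on-year growth."""
    return current_bn / (1.0 + growth)


if __name__ == "__main__":
    total = implied_total_revenue(DATA_CENTER_FY2023_BN, DATA_CENTER_SHARE)
    prior = implied_prior_year(DATA_CENTER_FY2023_BN, YOY_GROWTH)
    print(f"Implied FY2023 total revenue: US${total:.1f} billion")
    print(f"Implied FY2022 Data Center revenue: US${prior:.1f} billion")
```

Running the script prints an implied FY2023 total revenue of about US$27.0 billion and an implied FY2022 Data Center revenue of about US$10.6 billion, which shows the scale of the base from which the 41% growth was measured.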

The company is not resting on its laurels either. While the A100 is currently the most commonly used chip for AI in data centers, Nvidia announced the H100 GPU, based on its new Hopper architecture, in 2022. The H100 is built on TSMC’s 4N process (an enhanced version of the 5 nm node) and incorporates 80 billion transistors, compared with the A100’s 54.2 billion transistors on a 7 nm process. With Nvidia claiming speedups of 7X to 30X over the A100 across training and inference, at a comparable thermal design power in the PCIe form factor, the company is well placed to supply the key hardware needed to run the increasingly complex AI algorithms of tomorrow.

And yet, while Nvidia captures more of the data center processing market, there is still significant opportunity for chip designers at the edge, which IDTechEx’s latest report on AI chips forecasts will grow at a higher compound annual growth rate (CAGR) than cloud AI over the next ten years. AI at the edge has different requirements from AI in the cloud, chief among them power consumption, which is limited by the thermal capabilities of the devices in which the chips are embedded. Because edge chips typically consume no more than a few watts, the models they run must be greatly simplified. A chip such as the A100, with its large footprint and high transistor count, would be wasted in such a setting. Instead, companies need not design at leading-edge process nodes and can opt to manufacture at more mature nodes, which have a lower price point (and therefore a lower barrier to entry).

It is difficult to pinpoint where the AI inflection point lies or how far in the future it is. While opinions may differ, there is no question that the AI boom is happening and that AI tools have the capacity to transform workflows across industry verticals. To learn more about the global AI chips market, including technology developments, key players, and market prospects for AI-capable hardware, please refer to IDTechEx’s “AI Chips 2023-2033” report.

To find out more, including downloadable sample pages, please visit www.IDTechEx.com/AIChips.

About IDTechEx

IDTechEx guides your strategic business decisions through its Research, Subscription and Consultancy products, helping you profit from emerging technologies. For more information, contact research@IDTechEx.com or visit www.IDTechEx.com.

The post Nvidia Market Cap Exceeds US$1 Trillion, an Early Winner in the AI Boom appeared first on Edge AI and Vision Alliance.