How AI and Accelerated Computing Drive Energy Efficiency and Sustainability


AI isn’t just about building smarter machines. It’s about building a greener world.

From optimizing energy use to reducing emissions, AI and accelerated computing are helping industries tackle some of the world’s toughest environmental challenges.

As Joshua Parker, senior director of corporate sustainability at NVIDIA, explains in the latest episode of NVIDIA’s AI Podcast, these technologies are powering a new era of energy efficiency.

Parker, a seasoned sustainability professional with a background in law and engineering, previously led sustainability initiatives at major companies such as Western Digital, where he developed corporate sustainability strategies. His expertise spans corporate sustainability, intellectual property and environmental impact.

AI can, in fact, help reduce energy consumption. And it’s doing it in some surprising ways.

AI systems themselves use energy, of course, but the big story is how AI and accelerated computing are helping other systems save energy. Take data centers, for instance.

They’re the backbone of AI, housing the powerful systems that crunch the data needed for AI to work in services like chatbots, AI-powered search and content generation. Globally, data centers account for about 2% of total energy consumption, and AI-specific centers represent only a tiny fraction of that.

“AI still accounts for a tiny, tiny fraction of overall energy consumption globally,” Parker said.

By contrast, the potential for AI to optimize energy use across other systems is vast. That ability to optimize is AI’s real superpower.

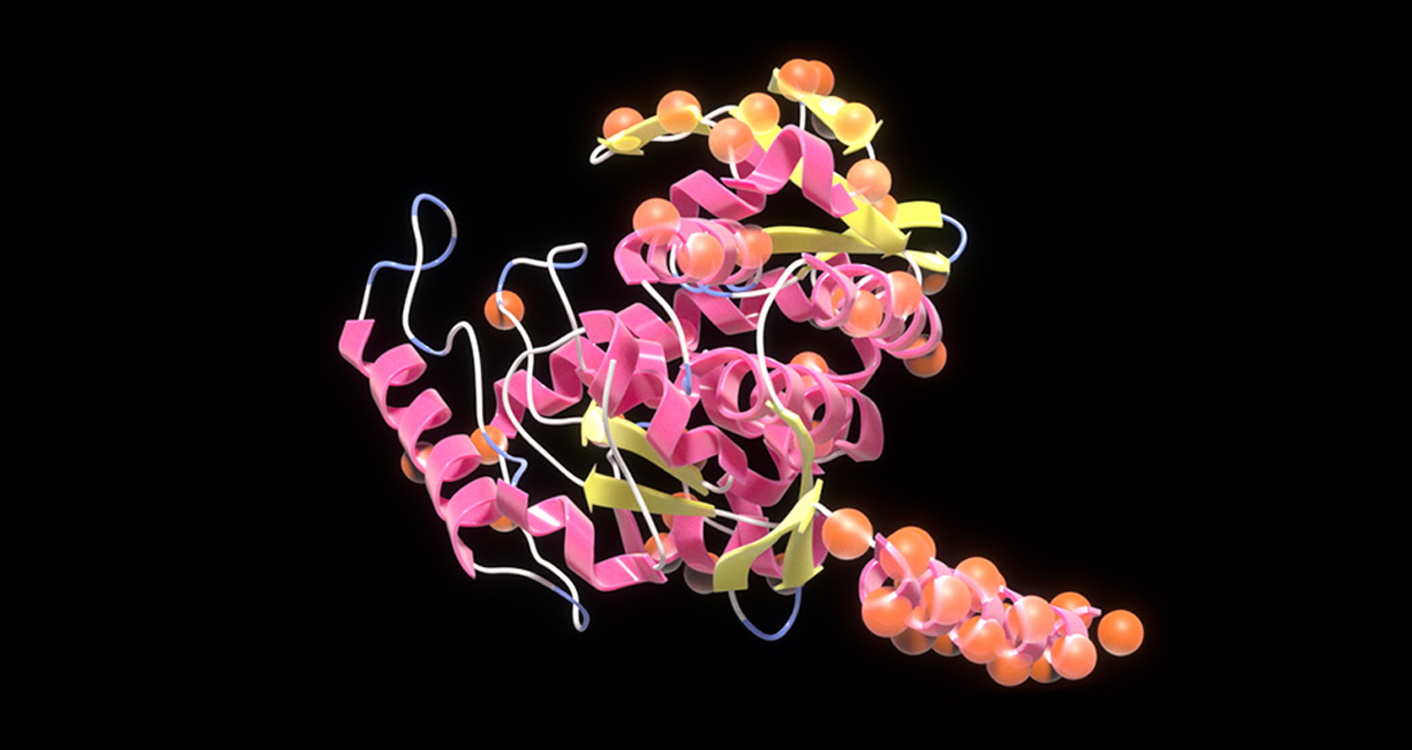

How? By using accelerated computing platforms that combine GPUs and CPUs. GPUs are designed to run many computations in parallel, so they can handle complex workloads quickly and efficiently.

In fact, these systems can be up to 20x more energy-efficient than traditional CPU-only systems for AI inference and training, Parker notes.

Advances in these platforms have contributed to massive gains in energy efficiency over the past eight years, which is part of the reason AI can now tackle increasingly complex problems.

What Is Accelerated Computing?

At its core, accelerated computing is about doing more with less.

It involves using specialized hardware — like GPUs — to perform tasks faster and with less energy.

This isn’t just theoretical. “The change in efficiency is really, really dramatic,” Parker emphasized.

“If you compare the energy efficiency for AI inference from eight years ago until today, [it’s] 45,000 times more energy efficient,” Parker said.
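To put the two figures Parker cites in perspective, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that the 45,000x gain compounded at a constant yearly rate over the eight years; real-world gains need not have arrived evenly. The helper names `annualized_factor` and `energy_fraction` are hypothetical and not part of any NVIDIA tool.

```python
"""Back-of-the-envelope arithmetic for the efficiency figures quoted above.

Assumption (for illustration only): the 45,000x gain is treated as if it
compounded at a constant yearly rate over eight years.
"""


def annualized_factor(total_gain: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `total_gain` over `years`."""
    if total_gain <= 0 or years <= 0:
        raise ValueError("total_gain and years must be positive")
    return total_gain ** (1.0 / years)


def energy_fraction(efficiency_gain: float) -> float:
    """Energy needed for the same work, relative to baseline, after an efficiency gain."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return 1.0 / efficiency_gain


if __name__ == "__main__":
    yearly = annualized_factor(45_000, 8)
    print(f"45,000x over 8 years is about {yearly:.1f}x per year")
    print(f"A 20x gain needs {energy_fraction(20):.0%} of the baseline energy")
```

Under that steady-rate assumption, 45,000x over eight years works out to roughly 3.8x per year, and a 20x efficiency gain means the same workload needs about 5% of the energy.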

This matters because as AI becomes more widespread, the demand for computing power grows.

Accelerated computing helps companies scale their AI operations without consuming massive amounts of energy.

This energy efficiency is key to AI’s ability to tackle some of today’s biggest sustainability challenges.

AI in Action: Tackling Climate Change

AI isn’t just saving energy — it’s helping to fight climate change.

“AI and accelerated computing in general are game-changers when it comes to weather and climate modeling and simulation,” Parker said.

For instance, AI-enhanced weather forecasting is becoming more accurate, allowing industries and governments to prepare for climate-related events like hurricanes or floods, Parker explained.

The better we can predict these events, the better we can prepare for them, which means fewer resources wasted and less damage done.

Another key area is the rise of digital twins — virtual models of physical environments.

These AI-powered simulations allow companies to optimize energy consumption in real time, without having to make costly changes in the physical world. In one case, using a digital twin helped a company achieve a 10% reduction in energy use, Parker said.

That may sound small, but scale it across industries, and the impact is huge.

AI is also playing a crucial role in developing new materials for renewable energy technologies like solar panels and electric vehicles, helping accelerate the transition to clean energy.

Can AI Make Data Centers More Sustainable?

AI needs data centers to operate, and, as AI grows, so does the demand for computing power. But data centers don’t have to be energy hogs. In fact, they can be part of the sustainability solution.

One major innovation is direct-to-chip liquid cooling. This technology allows data centers to cool their systems much more efficiently than traditional air conditioning methods, which are often energy-intensive.

“Our recommended design for the data centers for our new B200 chip is focused all on direct-to-chip liquid cooling,” Parker explained. By cooling directly at the chip level, this method saves energy, helping data centers stay cool without guzzling power.

“The compute density is so high that it makes more sense to invest in the cooling because you’re getting so much more compute for that same single direct-to-chip cooling element,” Parker said.

As AI scales up, the future of data centers will depend on designing for energy efficiency from the ground up.

That means integrating renewable energy, using energy storage solutions and continuing to innovate with cooling technologies.

The goal is to create green data centers that can meet the world’s growing demand for compute power without increasing their carbon footprint.

The Role of AI in Building a Sustainable Future

AI is not just a tool for optimizing systems — it’s a driver of sustainable innovation.

From improving the efficiency of energy grids to enhancing supply chain logistics, AI is leading the charge in reducing waste and emissions.

“AI, I firmly believe, is going to be the best tool that we’ve ever seen to help us achieve more sustainability and more sustainable outcomes,” Parker said.

Register for the NVIDIA AI Summit DC to explore how AI and accelerated computing are shaping the future of energy efficiency and climate solutions.