European Values Confront AI Innovation in EU’s Proposed AI Act


By John P. Desmond, AI Trends Editor

The European Commission on Wednesday released proposed regulations governing the use of AI, a first-of-a-kind proposed legal framework called the Artificial Intelligence Act, outlining acceptable and unacceptable practices around use of the innovative technology.

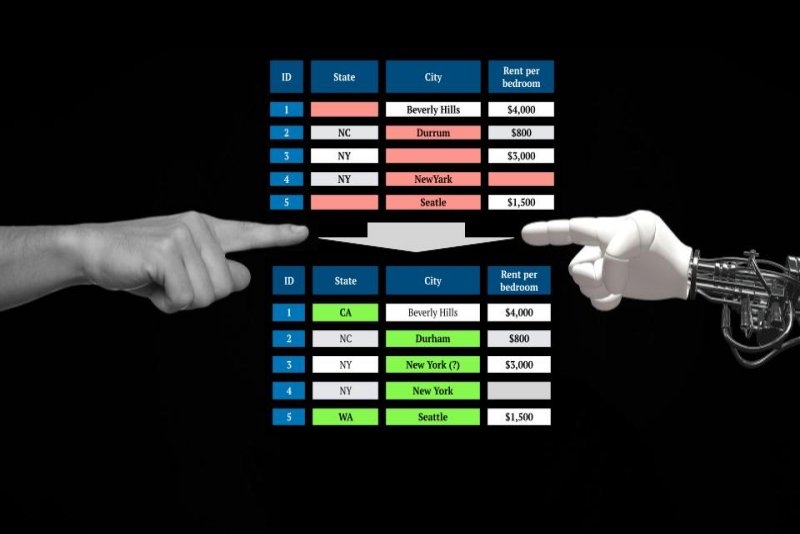

The draft rules would set limits around the use of AI in a range of activities, from self-driving cars to hiring decisions, bank lending, school enrollment selections, and the scoring of exams, according to an account in The New York Times. The rules would also cover the use of AI by law enforcement and court systems, areas considered “high risk” for their potential impact on public safety and fundamental rights.

Some uses would be banned, such as live facial recognition in public spaces, with exceptions for national security and some other purposes. The 108-page draft proposal has far-reaching implications for big tech companies including Google, Facebook, Microsoft, and Amazon, which have all invested substantially in AI development. Scores of other companies use AI to develop medicine, underwrite insurance policies, and judge creditworthiness. Governments are using AI in criminal justice and to allocate public services such as income support.

Companies that violate the new regulations, which are likely to take several years to move through the European Union approval process, could face fines of up to 6% of global sales.

“On artificial intelligence, trust is a must, not a nice to have,” stated Margrethe Vestager, the European Commission executive vice president who oversees digital policy for the 27-nation bloc. “With these landmark rules, the EU is spearheading the development of new global norms to make sure AI can be trusted.”

The European Union for the past decade has been the world’s most aggressive watchdog of the tech industry, with its policies often becoming blueprints for other nations. The General Data Protection Regulation (GDPR), for example, went into effect in May 2018 and has had a far-reaching effect as a data privacy regulation.

Reaction from US Big Tech Trickling In

Reaction from Silicon Valley is just beginning to take shape.

“The question that every firm in Silicon Valley will be asking today is: Should we remove Europe from our maps or not?” stated Andre Franca, the director of applied data science at CausaLens, a British AI startup, in an account in Fortune.

Anu Bradford, a law professor at Columbia University and author of the book The Brussels Effect, about how the EU has used its regulatory power, stated, “I think there are likely to be instances where this will be the global standard.”

The Commission does not want European companies to be at a disadvantage in relation to US or Chinese competitors, but its members have issues with how the leading American and Chinese companies have established their positions. They see the gathering of vast volumes of personal data and how AI is being deployed as trampling on individual rights and civil liberties that Europe has sought to protect, Bradford told Fortune. “They actually want to have this global impact,” Bradford stated about the Commission.

American technology companies and their representatives are not speaking highly so far of the EU’s proposed AI Act.

The act is “a damaging blow to the Commission’s goal of turning the EU into a global A.I. leader,” Benjamin Mueller, a senior policy analyst at the Center for Data Innovation in Washington, DC, told Fortune. The center's industry consultants are funded indirectly by US tech companies.

The proposed act would create “a thicket of new rules that will hamstring companies hoping to build and use AI in Europe,” causing European companies to fall behind, he suggested.

A different tack was taken by the Computer & Communications Industry Association, also in Washington, DC, which advocates for a range of technology firms. “We are encouraged by the EU’s risk-based approach to ensure that Europeans can trust and will benefit from AI solutions,” stated Christian Borggreen, the association’s vice president, in a statement. He cautioned that the regulations would need more clarity and that “regulation alone will not make the EU a leader in AI.”

AI Board Would Oversee How EU AI Act is Implemented

The EU’s AI Act also proposes a European Artificial Intelligence Board, made up of regulators from each member state, as well as the European Data Protection Supervisor, to oversee consistency in how the law is implemented. The board would also, according to Fortune, be responsible for recommending which AI use cases should be deemed “high-risk.”

Examples of high-risk use cases include: critical infrastructure that puts individual life and health at risk; models that determine access to education or professional training; worker management; access to financial services such as loans; law enforcement; and migration and border control. Models in those high-risk areas would need to undergo a risk assessment and take steps to mitigate dangers.

Daniel Leufer, a European policy analyst for Access Now, a non-profit focused on the digital rights of individuals, said in an account from BBC News that the proposed AI Act is vague in many areas. “How do we determine what is to somebody’s detriment? And who assesses this?” he stated in a tweet.

Leufer suggested the AI Act should be expanded to include all public sector AI systems, whatever their risk level. “This is because people typically do not have a choice about whether or not to interact with an AI system in the public sector,” he stated.

Herbert Swaniker, a lawyer at Clifford Chance, an international law firm based in London, stated the proposed law “will require a fundamental shift in how AI is designed” for suppliers of AI products and services.

Social scoring systems, such as those used in China that rate the trustworthiness of individuals and businesses, would be classified as contrary to “Union values” and be banned, according to an account from Politico.

AI Use by the Military Exempted

The proposal would also ban AI systems used for mass surveillance or that cause harm to people by manipulating their behavior. Military systems would be exempt from the AI Act, as would technology for fighting serious crime, or facial recognition if being used to find terrorists. One critic said the exceptions make the proposal subject to much interpretation.

“Giving discretion to national authorities to decide which use cases to permit or not simply recreates the loopholes and gray areas that we already have under current legislation and which have led to widespread harm and abuse,” stated Ella Jakubowska of digital rights group EDRi, in the account from Politico.

An earlier Politico interview with Eric Schmidt, former CEO of Google and chair of the US National Security Commission on AI, presaged the confrontation of European values with American AI innovations. Europe’s strategy, Schmidt suggested, will not be successful because Europe is “simply not big enough” to compete.

“Europe will need to partner with the United States on these key platforms,” Schmidt stated, referring to the American big tech companies that dominate the development of AI technologies.

Read the source articles and information in The New York Times, in Fortune, from BBC News and from Politico. Read the draft proposal of the EU’s AI Act.